PCI device passthrough lets you hand devices such as your GPU directly to a virtual machine for proper hardware acceleration. This makes it possible to run games and other graphics-intensive tasks with little to no performance loss compared to, for example, a bare-metal Windows installation.

No single guide can cover every system out there. This guide tries to give you as much information as possible, but not all of it will be relevant to you and your system, so be sure to do some research of your own.

Important BIOS settings for getting things working properly!

Make sure your BIOS is on its latest update.

Enable VT-d

Required for passthrough to work at all (Intel, on supported CPUs).

Disable CSM/Legacy Boot

Enable ACS

If present, set to Enabled (Auto doesn’t work).

Set PEG {NUMBER} ASPM to L0 or L0sL1

Set this for every PEG entry you have; if L0 is available, pick that.

Enable 4G Decoding

Disable Resizable BAR/Smart Access Memory

AMD GPUs (Vega and newer) run into the ‘Code 43’ error if this is enabled.

Enable IOMMU

If present; mostly found on AMD boards, with supported CPUs.

Set Primary Display to CPU/iGPU

Only if your CPU has an iGPU; otherwise skip this.

Set Pre-Allocated Memory to 64M

Only if your CPU has an iGPU; otherwise skip this too.

Setting the Boot arguments

Edit the GRUB file

nano /etc/default/grub

Replace the existing GRUB_CMDLINE_LINUX_DEFAULT line in your GRUB file with the one for your CPU.

For Intel CPUs

GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on iommu=pt initcall_blacklist=sysfb_init"

For AMD CPUs

GRUB_CMDLINE_LINUX_DEFAULT="quiet iommu=pt initcall_blacklist=sysfb_init"

Hit Ctrl + X -> Y -> Enter to save changes.
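If you are unsure which of the two lines applies to your machine, the CPU vendor can be read from /proc/cpuinfo. Below is a minimal sketch of a hypothetical helper, grub_cmdline_for_cpu, that prints the matching line from this guide. It assumes /proc/cpuinfo reports vendor_id as GenuineIntel or AuthenticAMD, and it only prints the line; pasting it into /etc/default/grub is still up to you.

```shell
# Hypothetical helper: print the GRUB_CMDLINE_LINUX_DEFAULT line from this
# guide that matches the CPU vendor found in /proc/cpuinfo (or another file).
grub_cmdline_for_cpu() {
    cpuinfo="${1:-/proc/cpuinfo}"
    # The line looks like "vendor_id<TAB>: GenuineIntel"; take the value part
    vendor=$(awk -F': *' '/^vendor_id/ { print $2; exit }' "$cpuinfo")
    case "$vendor" in
        GenuineIntel)
            echo 'GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on iommu=pt initcall_blacklist=sysfb_init"' ;;
        AuthenticAMD)
            echo 'GRUB_CMDLINE_LINUX_DEFAULT="quiet iommu=pt initcall_blacklist=sysfb_init"' ;;
        *)
            echo "unknown CPU vendor: $vendor" >&2
            return 1 ;;
    esac
}
```

Running grub_cmdline_for_cpu on the host prints the line to copy into /etc/default/grub.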

Your system might not boot through GRUB but through systemd-boot instead; this is the case when Proxmox is installed on ZFS and boots in UEFI mode.

Skip this part if Proxmox was not set up with RAID/ZFS during installation.

Edit the systemd-boot command line file

nano /etc/kernel/cmdline

For Intel CPUs

root=ZFS=rpool/ROOT/pve-1 boot=zfs intel_iommu=on iommu=pt initcall_blacklist=sysfb_init

For AMD CPUs

root=ZFS=rpool/ROOT/pve-1 boot=zfs iommu=pt initcall_blacklist=sysfb_init

Keep everything on a single line. Hit Ctrl + X -> Y -> Enter to save changes.
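Because /etc/kernel/cmdline has to stay a single line, hand-editing it is easy to get wrong. A minimal sketch, assuming a hypothetical helper named add_cmdline_params: it appends each parameter only if it is not already present as a whole word, and keeps a .bak copy of the original file.

```shell
# Hypothetical helper: append kernel parameters to a one-line cmdline file
# (such as /etc/kernel/cmdline), skipping any that are already present.
add_cmdline_params() {
    file="$1"; shift
    line=$(head -n 1 "$file")
    for param in "$@"; do
        # Pad with spaces so "iommu=pt" does not match inside another word
        case " $line " in
            *" $param "*) ;;                       # already there, skip
            *) line="${line:+$line }$param" ;;     # append with one space
        esac
    done
    # Back up the original, then write the result back as a single line
    cp "$file" "$file.bak" && printf '%s\n' "$line" > "$file"
}
```

For example, on an Intel host: add_cmdline_params /etc/kernel/cmdline intel_iommu=on iommu=pt initcall_blacklist=sysfb_init, followed by the refresh command for systemd-boot systems.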

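Once you’ve made the necessary changes, update the bootloader configuration and reboot.

For GRUB systems

update-grub

For systemd-boot systems

pve-efiboot-tool refresh

On newer Proxmox releases the same tool is named proxmox-boot-tool, so the command there is proxmox-boot-tool refresh.

Finally, reboot

reboot

Configuring IOMMU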

Once the Host is up and running again, we need to verify that IOMMU is enabled.

For Intel CPUs

dmesg | grep -e DMAR -e IOMMU

For AMD CPUs

dmesg | grep -e DMAR -e IOMMU -e AMD-Vi

You should see something like this:

“DMAR: IOMMU enabled”. If not, go back and double-check your changes.
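The dmesg wording differs between kernels and CPU vendors, so a second check can help. The sketch below is a hypothetical helper, check_iommu, built on two assumptions: that the boot arguments from earlier show up in /proc/cmdline, and that /sys/kernel/iommu_groups only has entries when the IOMMU is actually active. Both paths can be overridden, which is only there to make the function easy to try out.

```shell
# Hypothetical helper: report whether iommu=pt reached the kernel command line
# and how many IOMMU groups exist. Returns non-zero when there are no groups.
check_iommu() {
    cmdline_file="${1:-/proc/cmdline}"
    groups_dir="${2:-/sys/kernel/iommu_groups}"
    case " $(cat "$cmdline_file") " in
        *" iommu=pt "*) echo "cmdline: iommu=pt present" ;;
        *) echo "cmdline: iommu=pt missing (boot arguments not applied?)" ;;
    esac
    # Each subdirectory is one IOMMU group; none at all means no active IOMMU
    count=$(ls -1 "$groups_dir" 2>/dev/null | wc -l | tr -d ' ')
    echo "iommu groups: $count"
    [ "$count" -gt 0 ]
}
```

Run check_iommu without arguments on the host after the reboot.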

Next, enable the necessary kernel modules by editing the following file:

nano /etc/modules

Add these lines:

vfio

vfio_iommu_type1

vfio_pci

Hit Ctrl + X -> Y -> Enter to save changes.
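Appending these lines by hand works, but running the step twice leaves duplicate entries. A minimal sketch of a hypothetical ensure_lines helper that adds each line only if that exact line is not already in the file (it assumes the file, if it exists, ends with a newline):

```shell
# Hypothetical helper: append each given line to a file unless an identical
# line is already present (whole-line, fixed-string match).
ensure_lines() {
    file="$1"; shift
    touch "$file"
    for line in "$@"; do
        grep -qxF "$line" "$file" || printf '%s\n' "$line" >> "$file"
    done
}
```

For this step: ensure_lines /etc/modules vfio vfio_iommu_type1 vfio_pci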

After changing anything module-related, you need to refresh your initramfs.

update-initramfs -u -k all

Now check whether interrupt remapping has been enabled.

dmesg | grep remapping

It should output something like this:

For AMD CPUs

“AMD-Vi: Interrupt remapping enabled”

For Intel CPUs

“DMAR-IR: Enabled IRQ remapping in x2apic mode”

The x2apic part may differ on older CPUs, but it should still work.

Only if you run into issues, consider the following.

You could also try adding nox2apic to your GRUB or systemd-boot arguments.

If your system doesn’t support interrupt remapping, you may need to allow unsafe interrupts.

Be aware that this option might make your system unstable, though not necessarily.

Edit the following file:

nano /etc/modprobe.d/iommu_unsafe_interrupts.conf

Add this line:

options vfio_iommu_type1 allow_unsafe_interrupts=1

Hit Ctrl + X -> Y -> Enter to save changes.
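The unsafe-interrupts option is only meant for systems without interrupt remapping. This hypothetical helper, allow_unsafe_if_needed, reads dmesg output on stdin and writes the option only when neither of the remapping messages shown earlier (“Interrupt remapping enabled” on AMD, “Enabled IRQ remapping” on Intel) appears. It assumes those two phrases are what your kernel prints, so compare against your own dmesg output before relying on it.

```shell
# Hypothetical helper: write the unsafe-interrupts option only if the dmesg
# text on stdin contains no interrupt-remapping message from this guide.
allow_unsafe_if_needed() {
    conf="${1:-/etc/modprobe.d/iommu_unsafe_interrupts.conf}"
    if grep -qE 'Interrupt remapping enabled|Enabled IRQ remapping'; then
        echo "interrupt remapping is enabled; leaving $conf alone"
        return 0
    fi
    echo "no interrupt remapping found; writing $conf"
    printf '%s\n' 'options vfio_iommu_type1 allow_unsafe_interrupts=1' > "$conf"
}
```

Usage: dmesg | allow_unsafe_if_needed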

Checking IOMMU Groups

For PCI passthrough to work properly, you need to have a dedicated IOMMU group for each of the devices you want to assign to a VM.

To check that this is the case, enter the following command:

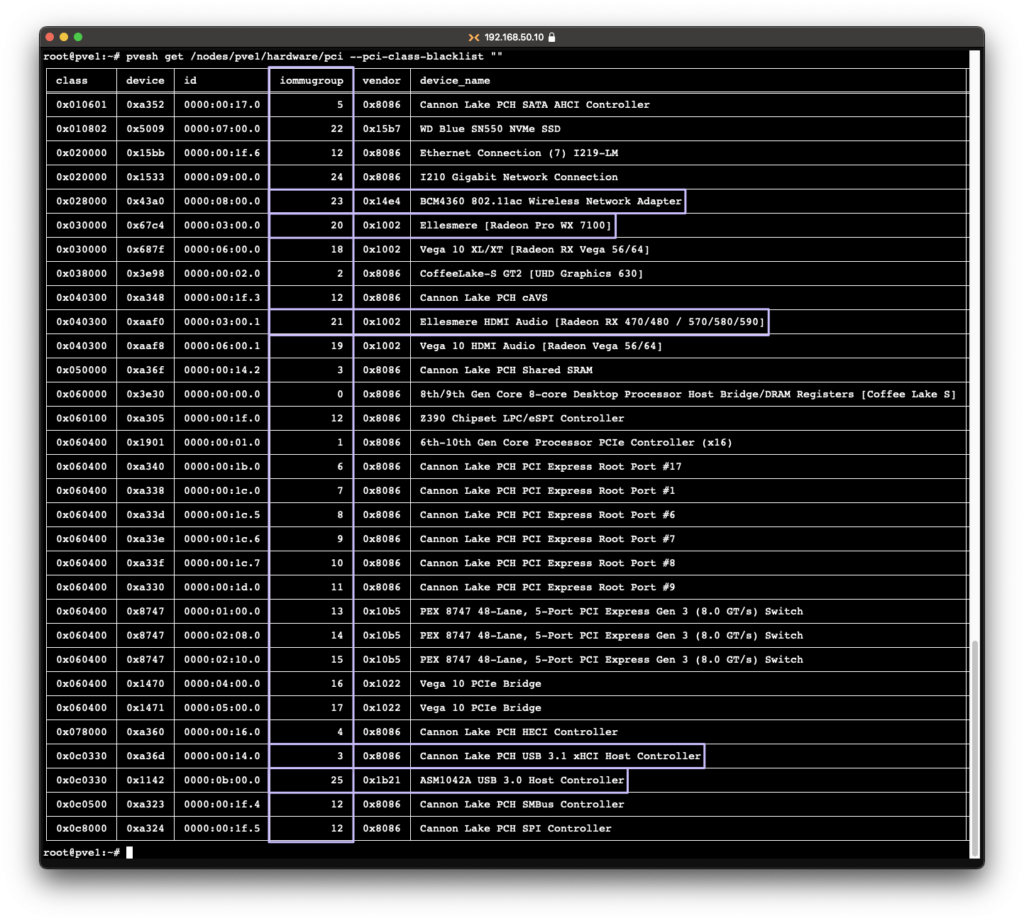

pvesh get /nodes/{nodename}/hardware/pci --pci-class-blacklist ""

Replace {nodename} with the name of your Proxmox node.

The screenshot shows an example from one of my nodes: my GPUs, USB controllers and wireless adapter each have their own dedicated group.

If your devices share a group, double-check for ACS (Access Control Services) in your BIOS. If you can’t find it anywhere, you can apply the ACS override by adding pcie_acs_override=downstream,multifunction to your GRUB or systemd-boot arguments; go back to the boot arguments step to do so.
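As an alternative to pvesh, the groups can be read directly from sysfs. The sketch below assumes the standard /sys/kernel/iommu_groups/GROUP/devices/ADDRESS layout; list_iommu_groups and shared_iommu_groups are hypothetical helper names. The second function prints only the groups that hold more than one device, which are the ones to look at if a device is not isolated.

```shell
# Hypothetical helper: print "GROUP ADDRESS" for every PCI device in sysfs,
# sorted by group number, then by address.
list_iommu_groups() {
    root="${1:-/sys/kernel/iommu_groups}"
    for dev in "$root"/*/devices/*; do
        [ -e "$dev" ] || continue          # no groups: the glob did not match
        group=$(basename "$(dirname "$(dirname "$dev")")")
        printf '%s %s\n' "$group" "$(basename "$dev")"
    done | sort -k1,1n -k2,2
}

# Hypothetical helper: print the numbers of groups containing several devices.
shared_iommu_groups() {
    list_iommu_groups "$@" |
        awk '{ n[$1]++ } END { for (g in n) if (n[g] > 1) print g }' |
        sort -n
}
```

To add device names, pass each address to lspci, e.g. list_iommu_groups | while read -r group addr; do echo "$group $(lspci -nns "$addr")"; done. Keep in mind that a GPU’s video and audio functions usually share one group, which is expected since they are passed through together.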

Blacklisting Modules

Blacklisting driver modules and devices is recommended so the VM(s) get proper access to your devices; otherwise Proxmox might not let go of a device, leaving it unavailable to the VM(s).

Let’s start with the modules. Edit the following file:

nano /etc/modprobe.d/pve-blacklist.conf

Add the following lines:

blacklist nouveau

blacklist nvidia

blacklist nvidiafb

blacklist snd_hda_codec_hdmi

blacklist snd_hda_intel

blacklist snd_hda_codec

blacklist snd_hda_core

blacklist radeon

blacklist amdgpu

Hit Ctrl + X -> Y -> Enter to save changes.
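To avoid duplicate entries when re-running this step, and to see which of these drivers the host currently has loaded, here is a minimal sketch with two hypothetical helpers. blacklist_modules appends a blacklist line only if it is missing; currently_loaded checks the first column of a /proc/modules-style file.

```shell
# Hypothetical helper: add "blacklist MODULE" lines that are not present yet.
blacklist_modules() {
    conf="$1"; shift
    touch "$conf"
    for mod in "$@"; do
        grep -qxF "blacklist $mod" "$conf" || printf 'blacklist %s\n' "$mod" >> "$conf"
    done
}

# Hypothetical helper: print which of the given modules appear in the first
# column of a modules file such as /proc/modules.
currently_loaded() {
    modules_file="$1"; shift
    for mod in "$@"; do
        if awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$modules_file"; then
            echo "$mod"
        fi
    done
}
```

For example: blacklist_modules /etc/modprobe.d/pve-blacklist.conf nouveau nvidia nvidiafb snd_hda_codec_hdmi snd_hda_intel snd_hda_codec snd_hda_core radeon amdgpu, and currently_loaded /proc/modules nouveau amdgpu to see what the host has loaded right now.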

Blacklisting Devices

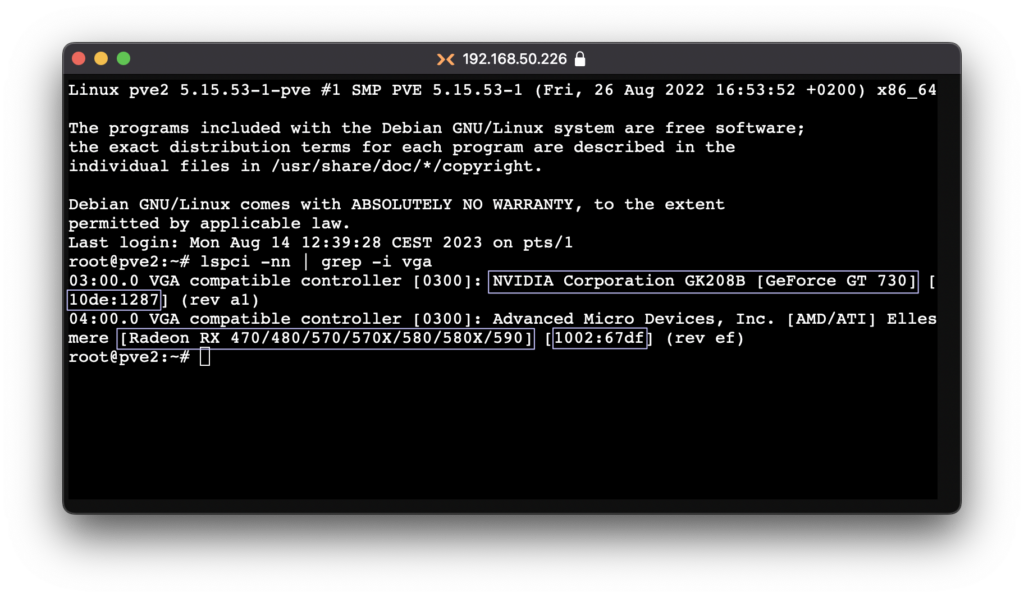

To find the IDs of your PCI devices, run the following command:

lspci -nn | grep -i {device}

Replace {device} with vga, nvidia, usb, audio, wireless, and so on. Don’t include the { } brackets.

You should then see a list similar to this:

“0x:00.x USB controller … [1234:5678]”

“0x:00.x VGA compatible controller … [1234:5678]”

“0x:00.x Audio device … [1234:5678]”

You don’t need to note the GPU audio ID.

Search for one device type at a time, and note the IDs you need.

As you can see here, the IDs for my GPUs are 10de:1287 and 1002:67d.

You might also want to look up your USB controller IDs, in case you run into issues with USB passthrough down the line.

Keep yours noted for the next step!

In general, it’s recommended to add the IDs of all devices you plan to use in a VM. Blacklisting essentially stops Proxmox from holding the devices hostage and denying a VM full access to them, at least in most cases.
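Copying IDs out of the lspci output by hand is error-prone. A minimal sketch of a hypothetical lspci_ids filter: it reads lspci -nn lines on stdin, keeps the last [xxxx:xxxx] on each line (the vendor:device ID; the class code in brackets has no colon, so it is skipped), drops duplicates from identical cards, and joins the result with commas.

```shell
# Hypothetical filter: turn `lspci -nn` lines on stdin into "id1,id2,...".
lspci_ids() {
    # The greedy ".*" makes sed keep the LAST [xxxx:xxxx] match on each line
    sed -nE 's/.*\[([0-9a-fA-F]{4}:[0-9a-fA-F]{4})\].*/\1/p' |
        awk '!seen[$0]++' |          # remove duplicate IDs, keep order
        paste -s -d, -               # join all lines with commas
}
```

Usage: lspci -nn | grep -i vga | lspci_ids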

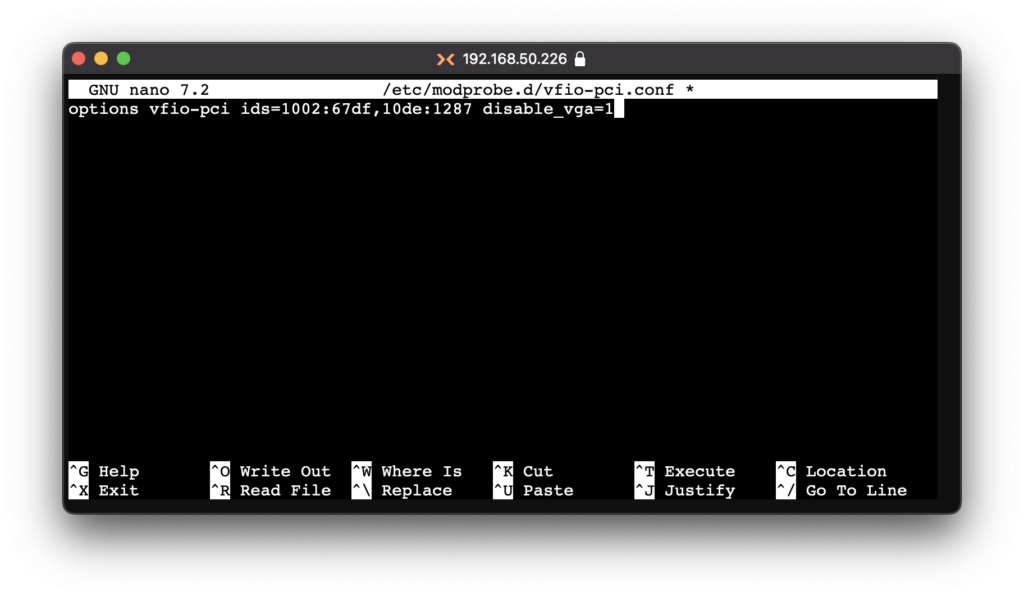

To blacklist the PCI device IDs on the host, edit the following file:

nano /etc/modprobe.d/vfio-pci.conf

Add your device IDs to this file like this:

options vfio-pci ids=1234:5678,1234:5678 disable_vga=1

Hit Ctrl + X -> Y -> Enter to save changes.

Again, do not add the GPU audio ID.

Note that adding disable_vga=1 here will probably prevent guests from booting in SeaBIOS mode, if you use that for anything.

You can also add disable_idle_d3=1 to the end of the line. This disables the D3 device power state, which keeps certain hardware from entering a low-power mode that can cause issues for some passed-through devices. Disabling it does no harm apart from possibly higher power consumption, and it has shown better stability with Thunderbolt cards, for example.
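A minimal sketch of a hypothetical vfio_pci_options helper that assembles the options line from the IDs you noted, rejecting anything that is not four hex digits, a colon and four hex digits. The DISABLE_IDLE_D3 variable is a switch invented for this sketch that appends disable_idle_d3=1.

```shell
# Hypothetical helper: print "options vfio-pci ids=... disable_vga=1" for the
# given vendor:device IDs; set DISABLE_IDLE_D3=1 to append disable_idle_d3=1.
vfio_pci_options() {
    if [ "$#" -eq 0 ]; then
        echo "usage: vfio_pci_options vendor:device ..." >&2
        return 1
    fi
    ids=""
    for id in "$@"; do
        if ! printf '%s\n' "$id" | grep -qE '^[0-9a-fA-F]{4}:[0-9a-fA-F]{4}$'; then
            echo "not a vendor:device ID: $id" >&2
            return 1
        fi
        ids="${ids:+$ids,}$id"      # comma-separate after the first ID
    done
    line="options vfio-pci ids=$ids disable_vga=1"
    if [ "${DISABLE_IDLE_D3:-0}" = 1 ]; then
        line="$line disable_idle_d3=1"
    fi
    printf '%s\n' "$line"
}
```

For example, vfio_pci_options 10de:1287 > /etc/modprobe.d/vfio-pci.conf overwrites the file with the generated line.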

Once you have all that sorted, reboot your host machine once again.

reboot

Adding your Devices to your VM(s)


Once your host is up and running again, we can finally start adding devices to our VMs! I’m using a macOS VM in this case, but the process is the same for Windows/Linux VMs.

It is normal for the Console window to show no usable output whenever a GPU is added or Display is set to none.

So make sure you have a monitor hooked up, or at least a dummy plug, so that you can remote into the VM with your preferred remote app.

These are the settings you want for your GPU to fully work. For some cards, only All Functions and ROM-Bar can be ticked, so do your own experimenting with these options!

Some cards also require a dumped .rom file to work. You have to dump it yourself from your specific card; see the vBIOS dumping guide further down in this post.

We recently discovered that if PCI-Express is not ticked, things like HDMI audio might not work. Note, however, that your install might not boot with it ticked; in that case, leave it unticked.
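The same checkboxes can also be set from the shell with qm set and a hostpciN option. The sketch below is a hypothetical hostpci_value helper that only builds the option string; the mapping it assumes is All Functions = address without the .function suffix, PCI-Express = pcie=1 (q35 machine type), ROM-Bar unticked = rombar=0, and a dumped ROM = romfile=NAME, looked up under /usr/share/kvm/. The environment variable names are invented for this sketch; check man qm on your node before relying on it.

```shell
# Hypothetical helper: build the value for a hostpciN option of "qm set".
# Switches (names invented for this sketch), passed as environment variables:
#   ALL_FUNCTIONS=1   pass every function of the card (GUI "All Functions")
#   PCIE=1            expose it as PCI Express (GUI "PCI-Express", q35 only)
#   ROMBAR=0          untick "ROM-Bar" (it is ticked by default)
#   ROMFILE=name.rom  use a dumped vBIOS stored in /usr/share/kvm/
hostpci_value() {
    addr="$1"                                 # e.g. 0000:01:00.0
    if [ "${ALL_FUNCTIONS:-0}" = 1 ]; then
        addr="${addr%.*}"                     # 0000:01:00.0 -> 0000:01:00
    fi
    value="$addr"
    if [ "${PCIE:-0}" = 1 ]; then value="$value,pcie=1"; fi
    if [ "${ROMBAR:-1}" = 0 ]; then value="$value,rombar=0"; fi
    if [ -n "${ROMFILE:-}" ]; then value="$value,romfile=$ROMFILE"; fi
    printf '%s\n' "$value"
}
```

For example, with 100 as a placeholder VM ID: qm set 100 --hostpci0 "$(ALL_FUNCTIONS=1 PCIE=1 hostpci_value 0000:01:00.0)"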

vBIOS Dumping (optional)

Depending on your hardware, you might have to dump your vBIOS. Finish the passthrough steps above before doing this!

Rom-Parser

Dumping the GPU vBIOS is simple and has a few benefits: it lets us test whether the card is UEFI compatible, and the dumped vBIOS file can be used in our VM, giving it a preloaded copy of the GPU vBIOS.

Run each command below one by one. We start by checking for updates, then install the dependencies and build rom-parser.

apt update

apt install gcc git build-essential -y

git clone https://github.com/awilliam/rom-parser

cd rom-parser

make

Now you just need to dump the vBIOS. It gets dumped into /tmp/image.rom.

Double-check your GPU bus ID with lspci; 0000:01:00.0 below is only an example.
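If you prefer not to edit the sysfs path by hand, here is a minimal sketch that wraps the dump steps in a hypothetical dump_vbios function taking the bus ID as a parameter and always switching the rom attribute back off. The companion has_rom_signature checks that the file starts with the bytes 0x55 0xAA, the standard PCI option-ROM signature, as a quick sanity check before running rom-parser.

```shell
# Hypothetical helper: dump the vBIOS of one PCI device through sysfs.
dump_vbios() {
    dev="$1"                          # bus ID from lspci, e.g. 0000:01:00.0
    out="${2:-/tmp/image.rom}"
    rom="/sys/bus/pci/devices/$dev/rom"
    [ -e "$rom" ] || { echo "no rom attribute for $dev" >&2; return 1; }
    echo 1 > "$rom"                   # make the ROM readable
    cat "$rom" > "$out"; status=$?
    echo 0 > "$rom"                   # switch it back off, even if cat failed
    return "$status"
}

# Hypothetical helper: succeed if the file begins with the 0x55 0xAA signature.
has_rom_signature() {
    sig=$(head -c 2 "$1" | od -An -tx1 | tr -d ' \n')
    [ "$sig" = "55aa" ]
}
```

Usage: dump_vbios 0000:01:00.0 /tmp/image.rom && has_rom_signature /tmp/image.rom && echo "looks like a ROM image"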

cd /sys/bus/pci/devices/0000:01:00.0/

echo 1 > rom

cat rom > /tmp/image.rom

echo 0 > rom

cd -

Now, back in the rom-parser directory, verify the vBIOS ROM:

./rom-parser /tmp/image.rom

It should output something similar to this:

Valid ROM signature found @0h, PCIR offset 190h

PCIR: type 0, vendor: 10de, device: 1280, class: 030000

PCIR: revision 0, vendor revision: 1

Valid ROM signature found @f400h, PCIR offset 1ch

PCIR: type 3, vendor: 10de, device: 1280, class: 030000

PCIR: revision 3, vendor revision: 0

EFI: Signature Valid

Last image

AMD Vendor Reset (optional)

It’s quite common for today’s AMD GPUs to suffer from what is known as the AMD reset bug: the card cannot reset properly, so it can only be used once per host power-on. When you boot a VM a second time, the card tries to reset and fails, causing the VM or your host to hang.

The vendor-reset project provides patches that work around this issue; read its GitHub page for more information and for the list of GPUs it supports.

This issue is particularly problematic on systems with only one GPU. That GPU is designated as the primary one and is initialized by the host UEFI during boot, which makes it unsuitable for passthrough even once.

Something similar to this dmesg output is usually what you’ll see when the reset issue happens.

pcieport 0000:00:02.0: AER: Uncorrected (Non-Fatal) error received: 0000:00:02.0

pcieport 0000:00:02.0: AER: PCIe Bus Error: severity=Uncorrected (Non-Fatal), type=Transaction Layer, (Requester ID)

pcieport 0000:00:02.0: AER: device [8086:3c04] error status/mask=00004000/00000000

pcieport 0000:00:02.0: AER: [14] CmpltTO (First)

pcieport 0000:00:02.0: AER: Device recovery successful

Let’s start by installing the required dependencies and vendor-reset.

Make sure you have the latest kernel headers; check for updates first:

apt update

apt install pve-headers

If this fails, make sure you’ve disabled the Enterprise repositories and enabled the No-Subscription one.
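DKMS builds vendor-reset against the headers of the kernel that is currently running, so it is worth checking that they are present before building. A minimal sketch, assuming the Debian convention that installed headers are reachable via /lib/modules/KERNEL/build; headers_for_running_kernel and vendor_reset_loaded are hypothetical helpers. The second one is meant for after the final reboot, since the kernel lists the module as vendor_reset (with an underscore) in /proc/modules.

```shell
# Hypothetical helper: check that headers exist for the given (default:
# running) kernel, which DKMS needs in order to build the module.
headers_for_running_kernel() {
    kernel="${1:-$(uname -r)}"
    modroot="${2:-/lib/modules}"
    if [ -e "$modroot/$kernel/build" ]; then
        echo "headers found for $kernel"
    else
        echo "no headers for $kernel (installed headers may belong to a newer kernel)" >&2
        return 1
    fi
}

# Hypothetical helper: succeed if vendor_reset is listed in a modules file.
vendor_reset_loaded() {
    grep -q '^vendor_reset ' "${1:-/proc/modules}"
}
```

Run headers_for_running_kernel before dkms install ., and vendor_reset_loaded && echo loaded after rebooting.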

Install the build dependencies:

apt install git dkms build-essential

Perform the build:

git clone https://github.com/gnif/vendor-reset.git

cd vendor-reset

dkms install .

Enable vendor-reset to be loaded automatically on startup:

echo "vendor-reset" >> /etc/modules

Apply the changes to the initramfs:

update-initramfs -u

Reboot your node to commit the changes:

reboot

When you start a VM with an AMD GPU, you will see messages in your dmesg output indicating that the new reset procedure is being used.

vfio-pci 0000:03:00.0: AMD_POLARIS10: version 1.0

vfio-pci 0000:03:00.0: AMD_POLARIS10: performing pre-reset

vfio-pci 0000:03:00.0: AMD_POLARIS10: performing reset

vfio-pci 0000:03:00.0: AMD_POLARIS10: GPU pci config reset

vfio-pci 0000:03:00.0: AMD_POLARIS10: performing post-reset

vfio-pci 0000:03:00.0: AMD_POLARIS10: reset result = 0

Some tweaks might be necessary depending on the type of GPU you have. You’ll need to mount your EFI disk and open this file in your preferred OpenCore config editor.

AMD Users (macOS)

EFI/OC/config.plist

Mount your EFI drive and open the file in OpenCore Configurator.

Boot-args go under NVRAM -> 7C436110-AB2A-4BBB-A880-FE41995C9F82

AMD RX 5000 and 6000 series cards require the following boot-arg to get proper output.

agdpmod=pikera

Only use OpenCore Legacy Patcher if you have an Nvidia GTX 600 or 700 series card, as those are the only Nvidia series compatible with macOS.

AMD RX 400/500 and RX 5000/6000 series cards do not require any patching, as they are natively supported.

Nvidia Users (macOS)

EFI/OC/config.plist

Boot-args go under

NVRAM -> 7C436110-AB2A-4BBB-A880-FE41995C9F82

amfi=0x80: fixes “AMFI is enabled”

ngfxcompat=1: fixes “Force compat property missing”

ngfxgl=1: fixes “Force OpenGL property missing”

nvda_drv_vrl=1: fixes “nvda_drv(_vrl) variable missing”

To solve the SIP error, change csr-active-config to 030A0000

Upon reboot, select Reset NVRAM from the boot-picker.

Reminder! ONLY use OpenCore Legacy Patcher if you have an Nvidia GTX 600 or 700 series card, as those are the only Nvidia series compatible with macOS.

If you’re using OCLP and get these errors, this sorts them out: